No, Bayesians don't think all parameters are random

Will Lowe (2016-05-03 16:34)

Pretty regularly - usually in the middle of one of those interminable fixed-vs-random effects discussions - someone will pipe up that “Of course, for Bayesians this random vs fixed effect distinction makes no sense because all parameters are random”.

To the extent it can be made to make sense, the claim is false. It’s also unhelpful because it’s pretty much guaranteed to confuse and put off people who have better things to do than pay attention to arguments in statistics.

But on the off chance you have a moment for one of those, let me try to disentangle things.

What is it to be random?

Before getting going, it’s useful to say what non-Bayesians mean by ‘random’. As far as I can tell, the basic picture is this: for a quantity to be random it must be the outcome of some kind of sampling process applied to a population. Quantities that are random in this sense can be assigned probability distributions and quantities that are not, cannot. Logically, it’s an ‘if and only if’.

From these premises it’s clear why Bayesians, with their willingness to put probability distributions on anything that’s uncertain, including all kinds of things that are not the result of any sampling process, can be confusing. Logically, for Bayesians there’s only an ‘if’.

Sampling implies distribution, but so do a bunch of other things.

So when a non-Bayesian claims that Bayesians assume everything to be random, what they typically mean is that Bayesians assume everything is the result of some kind of sampling process. That’s false.

And when a non-Bayesian claims that Bayesians assume everything to be random, all the Bayesians nod their heads because they really do like to use probability distributions to represent their uncertainty about things.

Hilarity seldom ensues.

The second part of the argument is that there is no useful distinction between what the non-Bayesian calls ‘random’ and ‘fixed’ effects, because ‘everything is random’. Since that claim’s not true in the relevant sense, it shouldn’t be surprising that this one is false too.

The easiest way to see why is to reconstruct fixed and random effects in a Bayesian framework and look at what the difference amounts to.

We’ll do it like this. The next section is a reminder of how and why Bayesians give probability distributions to things that are not sampled, and the one after draws the ‘fixed’ vs ‘random’ distinction.

Simple linear regression

Here’s a simple story about how $y$ comes to take the value it has, as a function of $x$:

$$y_i = \alpha + \beta x_i + \epsilon_i$$

For convenience, assume $x$ is randomly assigned, $\epsilon_i$ is Normal with mean zero and variance $\sigma^2$, and that we know somehow or other that the form of the model is correct.
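To make the story concrete, here is a minimal simulation sketch. The “true” values of $\alpha$, $\beta$ and $\sigma$ are hypothetical numbers chosen only for illustration. The thing to notice is that only $x$ and the errors are drawn at random; $\alpha$ and $\beta$ are single fixed numbers that we plug in once.

```python
import numpy as np

# Hypothetical "true" values, chosen only for illustration.
alpha, beta, sigma = 1.0, 2.0, 0.5
rng = np.random.default_rng(42)

n = 200
x = rng.normal(size=n)                           # x is randomly assigned
eps = rng.normal(loc=0.0, scale=sigma, size=n)   # Normal errors, variance sigma**2
y = alpha + beta * x + eps                       # y_i = alpha + beta * x_i + eps_i

# Ordinary least squares recovers the fixed values we plugged in.
X = np.column_stack([np.ones(n), x])
alpha_hat, beta_hat = np.linalg.lstsq(X, y, rcond=None)[0]
print(f"alpha_hat={alpha_hat:.2f}, beta_hat={beta_hat:.2f}")
```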

Now put a Bayes hat on. What does the new headgear commit us to?

With Bayes we start with a prior over all unknown quantities, quietly assuming the model above, and condition on data to get a posterior over the unknowns. I shall assume you know broadly how this process works. For current purposes let’s look more closely into the prior.

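The simplest form of prior factors into a prior over $\alpha$, a prior over $\beta$, and a prior over $\sigma$:

$$p(\alpha, \beta, \sigma) = p(\alpha)\, p(\beta)\, p(\sigma)$$

Consider $\beta$, which for concreteness we’ll model with a Normal having mean $m_\beta$ and variance $s_\beta^2$:

$$p(\beta) = \text{Normal}(\beta \mid m_\beta, s_\beta^2)$$

OK. Are we now committed to the idea that $\beta$ is ‘random’ in the sense of having a probability distribution? Obviously we are. The probability that $\beta$ falls in any given range is given by the distribution on the right-hand side.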

Are we committed to the idea that $\beta$ is ‘random’ in the Frequentist sense of being the result of some sampling process? Or that there are a lot of possible $\beta$s out there of which this is just one? No, we are not.

The prior is just a precise expression of what kinds of values are plausible for the (single, fixed, determinate) $\beta$ mentioned in the model. In words: a best guess for that value is the prior mean $m_\beta$, but there’s uncertainty to the tune of the prior standard deviation $s_\beta$.
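To show the prior doing nothing more than encoding plausibility, here is a minimal sketch of conditioning on data. It assumes, purely to keep the update in closed form, that $\alpha$ and $\sigma$ are known, so a $\text{Normal}(m_\beta, s_\beta^2)$ prior on $\beta$ is conjugate. The function name `beta_posterior` and all numeric values are hypothetical. With no data the prior comes back unchanged; with data, the posterior concentrates around the one fixed $\beta$ that generated them.

```python
import numpy as np

def beta_posterior(x, y, alpha, sigma, m_beta, s_beta):
    """Posterior mean and sd of beta under a Normal(m_beta, s_beta**2) prior.

    Simplifying assumption for this sketch: alpha and sigma are known, so the
    Normal prior is conjugate and the update has a closed form.
    """
    prior_prec = 1.0 / s_beta**2                 # precision = 1 / variance
    data_prec = np.sum(x**2) / sigma**2
    post_prec = prior_prec + data_prec
    post_mean = (prior_prec * m_beta + np.sum(x * (y - alpha)) / sigma**2) / post_prec
    return post_mean, np.sqrt(1.0 / post_prec)

# Hypothetical values: one fixed, determinate beta generates the data.
rng = np.random.default_rng(0)
alpha, beta_true, sigma = 1.0, 2.0, 0.5
x = rng.normal(size=100)
y = alpha + beta_true * x + rng.normal(0.0, sigma, size=100)

# Prior: "a best guess of 0, give or take 3".
m_beta, s_beta = 0.0, 3.0
print(beta_posterior(np.array([]), np.array([]), alpha, sigma, m_beta, s_beta))  # no data: the prior
print(beta_posterior(x, y, alpha, sigma, m_beta, s_beta))                         # after conditioning
```

Nothing in the code draws $\beta$ from its prior; the prior only weights candidate values.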

But wait, there’s more: if $\beta$ were the result of a sampling process and we were interested in, say, the mean of the population of $\beta$s from which it was a sample, then this prior would not reflect that information and we’d want a different one.

What would such a thing look like? Read on.

Less simple linear regression

Consider now the same linear regression of $y$ on $x$, but now observed within groups $j = 1, \ldots, J$:

$$y_{ij} = \alpha_j + \beta x_{ij} + \epsilon_{ij}$$

We suspect that there are things about those groups that affect $y$ that we do not want to mistake for the effect of $x$, so we want to control for those things. ‘Unobserved heterogeneity’ is the technical term. But let’s say that, apart from the grouping business, everything is still linear, conditionally Normal with constant variance, and all the good things. In particular, we’ll maintain the assumption that $x$ is randomly assigned, which now actually matters: it lets us avoid the tiring ‘what if individual errors are correlated with group fixed effects’ conversation before settling the current ‘random parameters’ question. We can deal with that quite easily, as it happens (Keyword: ‘Mundlak’, Reference: Bell and Jones 2015).

Now that there are groups, it’s traditional to wonder whether we want to think of these $J$ group-level intercepts as ‘fixed’ or ‘random’. How would we reconstruct this question with Bayes?

Let’s take a look at the $\alpha_j$s. We can, if we like, think of $\alpha_1, \ldots, \alpha_J$ as distinct, unrelated parameters, just like $\beta$ only with subscripts. On those assumptions, plus Normality, a suitable prior for $\alpha_j$ might be $\text{Normal}(\alpha_j \mid m_j, s_j^2)$, and for all the intercepts

$$p(\alpha_1, \ldots, \alpha_J) = \prod_{j=1}^{J} \text{Normal}(\alpha_j \mid m_j, s_j^2)$$

Notice that each intercept gets its own mean and variance. Since this is a product, learning about the intercept of some group $j$ won’t be informative about, and so won’t affect inferences about, any other group’s intercept. Also, $m_j$ need not equal $m_k$, and the variances $s_j^2$ might differ across groups too, if we were more certain about the values of some intercepts than others.

As far as I can tell, this product structure in the prior is all the assumption of ‘fixed effects’ amounts to in a Bayesian framework.
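Here is a minimal sketch of what the product structure buys. To keep it in closed form it assumes $\beta$ and $\sigma$ are known, so each group contributes residuals $y_{ij} - \beta x_{ij}$ that are Normal around $\alpha_j$. The helper `fixed_effects_posterior` and the simulated numbers are hypothetical. Piling extra data onto group 0 changes group 0’s posterior and leaves group 1’s exactly as it was.

```python
import numpy as np

def fixed_effects_posterior(resid_by_group, m, s, sigma):
    """Independent Normal(m[j], s[j]**2) priors on each alpha_j.

    resid_by_group[j] holds y_ij - beta * x_ij for group j, with beta and
    sigma treated as known (a simplifying assumption for this sketch).
    Returns posterior means and sds, one per group.
    """
    means, sds = [], []
    for r, m_j, s_j in zip(resid_by_group, m, s):
        prec = 1.0 / s_j**2 + len(r) / sigma**2   # each group updated on its own
        means.append((m_j / s_j**2 + np.sum(r) / sigma**2) / prec)
        sds.append(np.sqrt(1.0 / prec))
    return np.array(means), np.array(sds)

rng = np.random.default_rng(1)
sigma = 1.0
groups = [rng.normal(3.0, sigma, size=5), rng.normal(-1.0, sigma, size=5)]
m, s = [0.0, 0.0], [10.0, 10.0]                   # vague, separate priors

before, _ = fixed_effects_posterior(groups, m, s, sigma)
# Add a lot more data to group 0 only.
groups_more = [np.concatenate([groups[0], rng.normal(3.0, sigma, size=100)]), groups[1]]
after, _ = fixed_effects_posterior(groups_more, m, s, sigma)

print(before[1] == after[1])   # group 1's posterior is untouched
```

With priors this vague, each posterior mean sits close to its own group’s mean residual, which is roughly what a classical fixed-effects (dummy variable) estimate would give.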

Is $\alpha_j$ random in this model? Nope. Not any more than $\beta$ was. Each intercept represents the determinate but unobserved attributes of group $j$ that affect $y$. This prior simply expresses the idea that learning about one intercept is not informative about any of the others.

Same model; different prior

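What if these groups were a random sample, perhaps one selected by the analyst from a wider population? Then a more natural prior would be

$$p(\alpha_1, \ldots, \alpha_J \mid \mu, \tau^2) = \prod_{j=1}^{J} \text{Normal}(\alpha_j \mid \mu, \tau^2)$$

The $\alpha_j$s are now ‘random’ in the sense that they are a sample from a population with mean $\mu$ and variance $\tau^2$.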

Note that there are now only 2 parameters of interest governing this population prior, its mean $\mu$ and variance $\tau^2$, rather than the $2J$ separate means and variances in the previous prior. We could put a prior on them the same as we did with $\beta$, and do the Bayes all over again, just one turtle down the stack.
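Going one turtle down can be sketched numerically. The assumptions here are ours: $\tau$, $\sigma$ and $\beta$ are treated as known, the population mean gets a hypothetical hyperprior $\mu \sim \text{Normal}(m_0, v_0)$, and each group is summarised by its mean residual $\bar{y}_j$ with variance $\sigma^2 / n_j$. Integrating out $\mu$ makes the intercepts jointly Normal with covariance $\tau^2 I + v_0 \mathbf{1}\mathbf{1}^\top$, so ordinary Gaussian conditioning gives the posterior. The function name and numbers are hypothetical.

```python
import numpy as np

def hierarchical_posterior_mean(ybar, n, obs, sigma, tau, m0, v0):
    """Posterior mean of alpha_1..alpha_J under a shared population prior.

    Assumed model (a sketch, with beta, sigma and tau treated as known):
        mu      ~ Normal(m0, v0)
        alpha_j ~ Normal(mu, tau**2)                independently given mu
        ybar_j  ~ Normal(alpha_j, sigma**2 / n_j)   group mean of y - beta * x
    Integrating out mu gives alpha ~ MVN(m0 * 1, tau**2 * I + v0 * 1 1^T).
    Only the groups listed in `obs` contribute data.
    """
    J = len(n)
    prior_mean = np.full(J, m0)
    prior_cov = tau**2 * np.eye(J) + v0 * np.ones((J, J))
    obs = np.asarray(obs, dtype=int)
    if obs.size == 0:
        return prior_mean
    S_ao = prior_cov[:, obs]                        # Cov(alpha, ybar_obs)
    S_oo = prior_cov[np.ix_(obs, obs)] + np.diag(sigma**2 / np.asarray(n, float)[obs])
    resid = np.asarray(ybar, float)[obs] - m0
    return prior_mean + S_ao @ np.linalg.solve(S_oo, resid)

n = [20, 20, 20]
ybar = [4.0, 0.0, 0.0]        # only entries listed in `obs` are used
args = dict(sigma=1.0, tau=0.5, m0=0.0)

no_data = hierarchical_posterior_mean(ybar, n, [], v0=4.0, **args)
group0_only = hierarchical_posterior_mean(ybar, n, [0], v0=4.0, **args)
mu_known = hierarchical_posterior_mean(ybar, n, [0], v0=0.0, **args)

print(no_data[1], group0_only[1], mu_known[1])
```

Seeing only group 0 pulls the estimate for the unseen group 1 upward. Setting `v0=0.0` (population mean known) turns the joint prior back into a product, and group 0 then tells us nothing about group 1; in this sketch the cross-group learning comes entirely from uncertainty about $\mu$.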

So are the $\alpha_j$s random? Yes they are, in everybody’s sense.

As an aside, if we weren’t being Bayesian we’d estimate $\mu$ and $\tau^2$ directly, with REML or some such. It turns out that unreconstructed Maximum Likelihood works rather badly for the population variance $\tau^2$ when there are few groups, but that’s another story that won’t exercise us here. You can find it in Elff et al. 2020, among other places.
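For the curious, here is a small simulation of the ML problem. It assumes the simplest case, a balanced one-way random-intercept layout, where ML and REML for $\tau^2$ have textbook closed forms in terms of the between-group and within-group mean squares (both truncated at zero). It is only an illustration, not the analysis in Elff et al.; the group sizes, true variances and seed are hypothetical.

```python
import numpy as np

def ml_and_reml_tau2(y):
    """Closed-form ML and REML estimates of tau**2, truncated at zero, for a
    balanced one-way model y[j, i] = mu + alpha_j + eps_ij.
    y has shape (J, n): J groups with n observations each.
    """
    J, n = y.shape
    group_means = y.mean(axis=1)
    msb = n * np.sum((group_means - y.mean())**2) / (J - 1)      # between groups
    msw = np.sum((y - group_means[:, None])**2) / (J * (n - 1))  # within groups
    reml = max(0.0, (msb - msw) / n)
    ml = max(0.0, ((J - 1) / J * msb - msw) / n)   # ML divides by J, not J - 1
    return ml, reml

rng = np.random.default_rng(2020)
J, n, tau, sigma = 5, 10, 1.0, 1.0    # few groups; true tau**2 = 1
est = np.array([
    ml_and_reml_tau2(rng.normal(0, tau, (J, 1)) + rng.normal(0, sigma, (J, n)))
    for _ in range(2000)
])
print("mean ML:", est[:, 0].mean(), "mean REML:", est[:, 1].mean())
```

With only five groups, the ML estimates average noticeably below the true value of 1, while REML lands close to it.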

Exchangeability, but no sampling

We noted above that sampling implies distribution, but so do a bunch of other things. Here’s a particularly interesting one of those things:

If the groups, or rather their intercepts, are exchangeable, meaning that the distribution of the sorts of values you think all the intercepts could have isn’t altered by someone switching their subscripts, then there is (in the de Finetti Representation Theorem sense of ‘is’) a distribution from which they come that looks just like the prior above.

This is simply the mathematical form of the intuitive idea that groups should be informative about each other, and not a claim about any sampling that might have happened.
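A numerical sketch can make the two halves of this concrete, under assumptions of our own: a Normal population prior with a hypothetical hyperprior $\mu \sim \text{Normal}(m_0, v_0)$ and known $\tau$. First, the joint covariance of the intercepts, $\tau^2 I + v_0 \mathbf{1}\mathbf{1}^\top$, is unchanged by relabelling the groups. Second, drawing $\mu$ once and then the intercepts independently given $\mu$ (the mixture form de Finetti’s theorem guarantees, strictly for infinitely exchangeable sequences) reproduces that covariance. Nothing here is a proof; it only checks the structure.

```python
import numpy as np

J, tau, m0, v0 = 4, 0.5, 0.0, 4.0      # hypothetical values
cov = tau**2 * np.eye(J) + v0 * np.ones((J, J))

# Exchangeability: relabelling the groups leaves the joint distribution alone.
perm = np.random.default_rng(0).permutation(J)
P = np.eye(J)[perm]                     # permutation matrix
print(np.allclose(P @ cov @ P.T, cov))

# Mixture form: draw mu once per replicate, then alpha_j iid given mu.
rng = np.random.default_rng(1)
mu = rng.normal(m0, np.sqrt(v0), size=(100_000, 1))
alpha = rng.normal(mu, tau, size=(100_000, J))   # loc broadcasts across groups
emp_cov = np.cov(alpha, rowvar=False)
print(np.round(emp_cov, 1))
```

The large off-diagonal entries are the “groups are informative about each other” idea in covariance form, with no sampling of actual groups anywhere in the code.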

This was way too long and I skimmed the middle bit. What is your pithy takeaway?

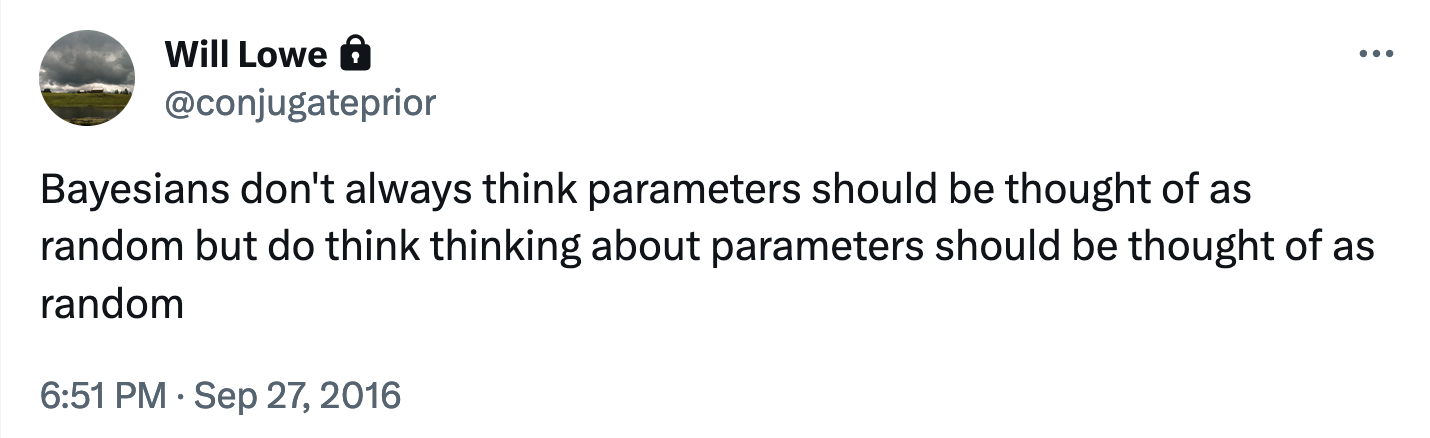

Back in 2016 I got it into 140 characters, but if I’d put this at the top, you’d never have come so far down the page:

Original Twitter link, now probably inaccessible.